1. Introduction

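I recently set up a performance-testing environment on three cloud hosts, each with 8 cores and 16 GB of memory (admittedly an awkward amount of memory). The chosen deployment was several shards, each a three-member replica set. The aim was to raise overall database throughput by scaling out horizontally while keeping the data as highly available as possible.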

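In practice, however, the three-shard cluster of three-member replica sets could not sustain continuous high-volume writes. After some research, we changed the deployment and added two more nodes to the MongoDB cluster to share the write load.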

2. Original Deployment

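In the original deployment, each host (A/B/C) ran one instance of each of the three shards (shard1/shard2/shard3), nine instances in total. For example, the shard1 instances on hosts A, B and C together formed one replica set.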

The shard1 instance configuration is as follows:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/shard1/log/shard1.log"
  logAppend: true
  logRotate: rename
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/shard1/log/shard1.pid"
net:
  bindIp: 0.0.0.0
  port: 27001
  maxIncomingConnections: 20000
setParameter:
  enableLocalhostAuthBypass: true
storage:
  dbPath: "/data/mongodb/shard1/data"
  wiredTiger:
    engineConfig:
      cacheSizeGB: 3
      journalCompressor: snappy
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
sharding:
  clusterRole: shardsvr
replication:
  replSetName: shard1
security:
  keyFile: "/opt/mongodb/mongo.key"
```

The shard2 instance configuration is as follows:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/shard2/log/shard2.log"
  logAppend: true
  logRotate: rename
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/shard2/log/shard2.pid"
net:
  bindIp: 0.0.0.0
  port: 27002
  maxIncomingConnections: 20000
setParameter:
  enableLocalhostAuthBypass: true
storage:
  dbPath: "/data/mongodb/shard2/data"
  wiredTiger:
    engineConfig:
      cacheSizeGB: 3
      journalCompressor: snappy
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
sharding:
  clusterRole: shardsvr
replication:
  replSetName: shard2
security:
  keyFile: "/opt/mongodb/mongo.key"
```

The shard3 instance configuration is as follows:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/shard3/log/shard3.log"
  logAppend: true
  logRotate: rename
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/shard3/log/shard3.pid"
net:
  bindIp: 0.0.0.0
  port: 27003
  maxIncomingConnections: 20000
setParameter:
  enableLocalhostAuthBypass: true
storage:
  dbPath: "/data/mongodb/shard3/data"
  wiredTiger:
    engineConfig:
      cacheSizeGB: 3
      journalCompressor: snappy
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
sharding:
  clusterRole: shardsvr
replication:
  replSetName: shard3
security:
  keyFile: "/opt/mongodb/mongo.key"
```

3. The Mistake

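When adding the new nodes to the MongoDB cluster, I did not yet understand sharded replica sets well, and I copied the shard1/shard2/shard3 configuration files to the new cloud hosts unchanged. I then updated the shard configuration: shard1, shard2 and shard3 had each previously had three members, on hosts A/B/C; now each had five members, on hosts A/B/C/D/E.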

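After importing data and running load tests for a while, we found that the cluster's load had not dropped. Instead, both CPU and network load spiked. In addition, two nodes carried noticeably less load than the other three. On reflection, the root cause was an unclear understanding of how shards and replica sets relate.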

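What we wanted was a five-shard MongoDB cluster in which each shard is a replica set of three instances. What we had actually built was still a three-shard cluster, with each shard now made up of five instances. Are more replica-set members always better?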

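The figure above shows a typical three-member replica set: one primary and two secondaries. The members exchange heartbeats and elect the primary by majority vote, and every member holds essentially the same data. All writes to a shard go to its primary and are then applied on every secondary, so adding members to a replica set does not add write capacity; only adding shards does. Three members is the smallest set that can still elect a primary after losing one member (2 of 3 votes). Five members can of course elect a primary too, but the two extra members noticeably increase network and I/O overhead between the nodes while adding little in terms of data safety.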
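To make this trade-off concrete, the sketch below computes, for a replica set with n voting members, the majority needed to elect a primary, how many members can be lost while an election is still possible, and how many secondaries must apply every write. The function name `replicaSetVoting` is hypothetical; JavaScript is used because the mongo shell commands later in this article are JavaScript. It runs in Node.js as written.

```javascript
// Majority arithmetic for a replica set with n voting members:
// electing a primary needs floor(n / 2) + 1 votes from reachable members.
function replicaSetVoting(n) {
  const majority = Math.floor(n / 2) + 1;
  return {
    members: n,
    majority, // votes needed to elect a primary
    faultTolerance: n - majority, // members that can be lost while a primary can still be elected
    secondaries: n - 1, // members that must each apply every write of the primary
  };
}

for (const n of [3, 4, 5]) {
  console.log(JSON.stringify(replicaSetVoting(n)));
}
```

For n = 3 the majority is 2 and one member may fail. For n = 5 two members may fail, but the number of secondaries that replay every write doubles from 2 to 4, while the shard still has a single primary accepting writes. n = 4 shows that an even member count adds no fault tolerance over three.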

4. New Deployment Plan

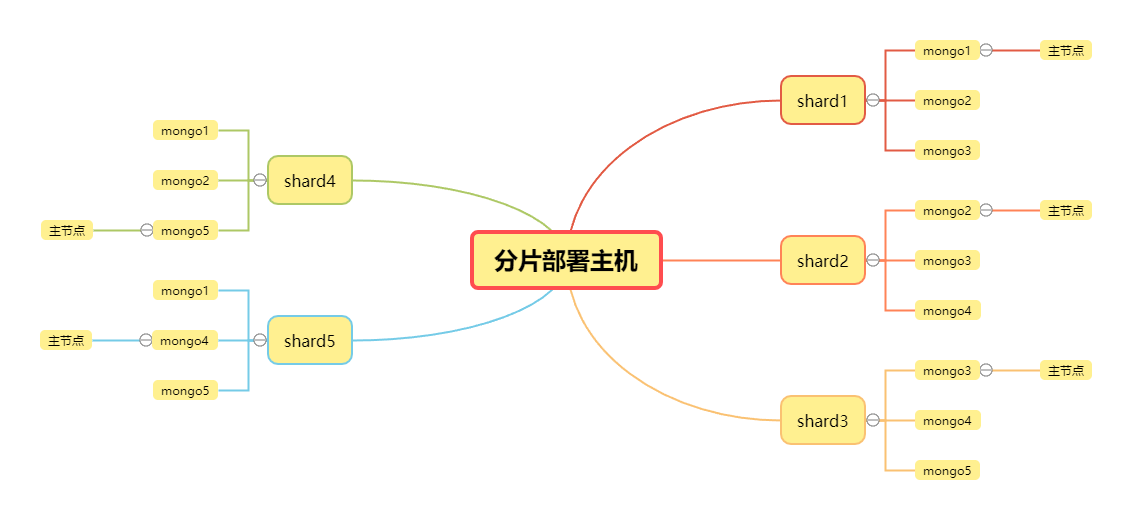

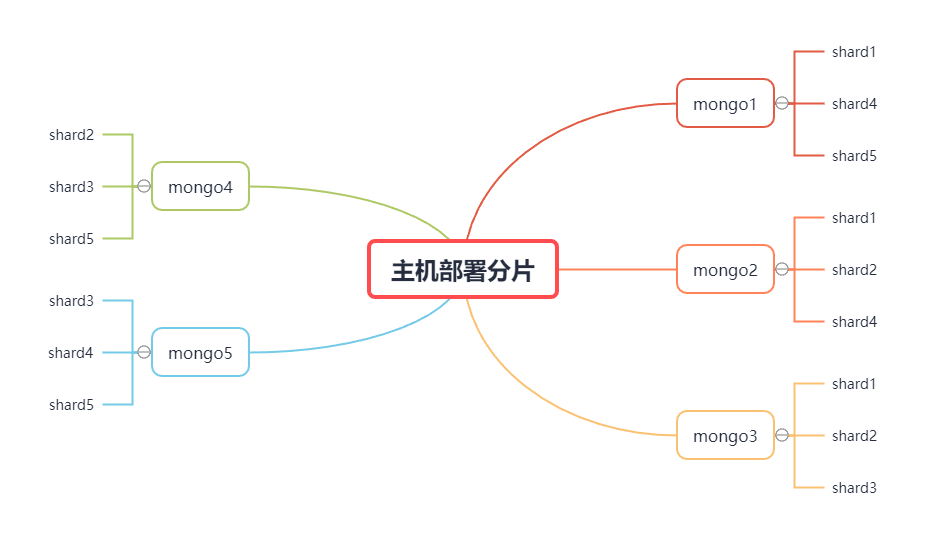

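Based on the analysis above, the MongoDB cluster was redeployed as 5 shards, each a 3-member replica set, for 5 × 3 = 15 shard instances in total. The IP addresses of the five cloud hosts are: mongo1 (10.14.2.2), mongo2 (10.14.2.11), mongo3 (10.14.2.26), mongo4 (10.14.2.6) and mongo5 (10.14.2.24).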
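As a sketch of how such a layout can be derived and checked, the hypothetical JavaScript below places shard k on three consecutive hosts (round-robin), treats the first of them as the intended primary, and derives both the `rs.initiate()` document and the `sh.addShard()` seed string from the same data. This is one possible layout, not necessarily the one in the author's tables: shard1 does land on mongo1/mongo2/mongo3 as in the author's setup, but the author's shard4 runs on mongo1, mongo2 and mongo5, whereas this scheme would put it on mongo4, mongo5 and mongo1. Giving the intended primary a higher `priority` in the `rs.initiate()` document is an assumption added here as an alternative to adjusting priorities afterwards; it is not what the author did.

```javascript
// Hosts and IPs as listed in the article.
const hosts = [
  { name: "mongo1", ip: "10.14.2.2" },
  { name: "mongo2", ip: "10.14.2.11" },
  { name: "mongo3", ip: "10.14.2.26" },
  { name: "mongo4", ip: "10.14.2.6" },
  { name: "mongo5", ip: "10.14.2.24" },
];

// Hypothetical layout: shard s uses hosts s, s+1, ... (mod host count);
// the first host is the intended primary. shardN listens on port 27000 + N.
function roundRobinPlacement(hosts, shardCount, membersPerShard) {
  const shards = [];
  for (let s = 0; s < shardCount; s++) {
    const members = [];
    for (let m = 0; m < membersPerShard; m++) {
      members.push(hosts[(s + m) % hosts.length]);
    }
    shards.push({ name: `shard${s + 1}`, port: 27001 + s, members });
  }
  return shards;
}

// Count instances and intended primaries per host.
function perHostLoad(shards) {
  const load = {};
  for (const shard of shards) {
    shard.members.forEach((h, i) => {
      load[h.name] = load[h.name] || { instances: 0, primaries: 0 };
      load[h.name].instances += 1;
      if (i === 0) load[h.name].primaries += 1;
    });
  }
  return load;
}

// Document for rs.initiate(); the intended primary gets the higher priority.
function initiateConfig(shard) {
  return {
    _id: shard.name,
    members: shard.members.map((h, i) => ({
      _id: i,
      host: `${h.ip}:${shard.port}`,
      priority: i === 0 ? 1 : 0.5,
    })),
  };
}

// Seed string for sh.addShard("<replSetName>/<host:port>,...").
function addShardSeed(shard) {
  return `${shard.name}/` + shard.members.map((h) => `${h.ip}:${shard.port}`).join(",");
}

const shards = roundRobinPlacement(hosts, 5, 3);
console.log(perHostLoad(shards));
console.log(JSON.stringify(initiateConfig(shards[0])));
console.log(addShardSeed(shards[0]));
```

With 5 hosts, 5 shards and 3 members per shard, every host ends up with 3 instances and exactly 1 intended primary, which is the balance the new plan is aiming for.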

4.1 Hosts per shard

4.2 Shards per host

4.3 Shard instance configuration files

The shard1/shard2/shard3 configuration files were shown above; only the shard4 and shard5 files are added here.
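Apart from the shard name (log, pid and data paths, `replSetName`) and the port (27000 + N), the five shard configuration files are identical. Copying a file by hand and forgetting to change one field is exactly the kind of slip described in section 3, so a small generator can help. The hypothetical `shardConfigYaml` below is a sketch that prints the same content as the files in this article; writing the output to `/data/mongodb/shardN/conf/shard.conf` on the right hosts is left to whatever deployment tooling is in use.

```javascript
// Hypothetical generator: build the mongod YAML config for shard n.
// Only the shard name (paths, replSetName) and port (27000 + n) vary.
function shardConfigYaml(n) {
  const name = `shard${n}`;
  const base = `/data/mongodb/${name}`;
  return [
    "systemLog:",
    "  destination: file",
    `  path: "${base}/log/${name}.log"`,
    "  logAppend: true",
    "  logRotate: rename",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: "${base}/log/${name}.pid"`,
    "net:",
    "  bindIp: 0.0.0.0",
    `  port: ${27000 + n}`,
    "  maxIncomingConnections: 20000",
    "setParameter:",
    "  enableLocalhostAuthBypass: true",
    "storage:",
    `  dbPath: "${base}/data"`,
    "  wiredTiger:",
    "    engineConfig:",
    "      cacheSizeGB: 3",
    "      journalCompressor: snappy",
    "    collectionConfig:",
    "      blockCompressor: snappy",
    "    indexConfig:",
    "      prefixCompression: true",
    "sharding:",
    "  clusterRole: shardsvr",
    "replication:",
    `  replSetName: ${name}`,
    "security:",
    '  keyFile: "/opt/mongodb/mongo.key"',
    "",
  ].join("\n");
}

// Print the files for shard4 and shard5.
for (const n of [4, 5]) {
  console.log(`--- shard${n} ---`);
  console.log(shardConfigYaml(n));
}
```

Note that with `cacheSizeGB: 3` and three shard instances per host, the WiredTiger caches alone take about 9 GB of each host's 16 GB.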

shard4:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/shard4/log/shard4.log"
  logAppend: true
  logRotate: rename
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/shard4/log/shard4.pid"
net:
  bindIp: 0.0.0.0
  port: 27004
  maxIncomingConnections: 20000
setParameter:
  enableLocalhostAuthBypass: true
storage:
  dbPath: "/data/mongodb/shard4/data"
  wiredTiger:
    engineConfig:
      cacheSizeGB: 3
      journalCompressor: snappy
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
sharding:
  clusterRole: shardsvr
replication:
  replSetName: shard4
security:
  keyFile: "/opt/mongodb/mongo.key"
```

shard5:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/shard5/log/shard5.log"
  logAppend: true
  logRotate: rename
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/shard5/log/shard5.pid"
net:
  bindIp: 0.0.0.0
  port: 27005
  maxIncomingConnections: 20000
setParameter:
  enableLocalhostAuthBypass: true
storage:
  dbPath: "/data/mongodb/shard5/data"
  wiredTiger:
    engineConfig:
      cacheSizeGB: 3
      journalCompressor: snappy
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
sharding:
  clusterRole: shardsvr
replication:
  replSetName: shard5
security:
  keyFile: "/opt/mongodb/mongo.key"
```

5. Deployment

5.1 configServer

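Start the config server on all five nodes:

```shell
# Start the config server (run on all five nodes)
/opt/mongodb/bin/mongod -f /data/mongodb/configserver/conf/configserver.conf
```

Pick one node and initialize the config server replica set: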

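```shell
# Connect to the local config server
/opt/mongodb/bin/mongo --port 27000
```

```javascript
use admin
// Enter the following configuration; configsvr: true marks this replica set
// as the cluster's config server replica set
config = {_id: "cfgsvr", configsvr: true, members: [
  {_id: 0, host: "10.14.2.2:27000"},
  {_id: 1, host: "10.14.2.6:27000"},
  {_id: 2, host: "10.14.2.11:27000"},
  {_id: 3, host: "10.14.2.24:27000"},
  {_id: 4, host: "10.14.2.26:27000"}
]}
rs.initiate(config)
// Leave the config server shell
exit
```

5.2 shard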

Start the shard instances on each of the five nodes in turn.

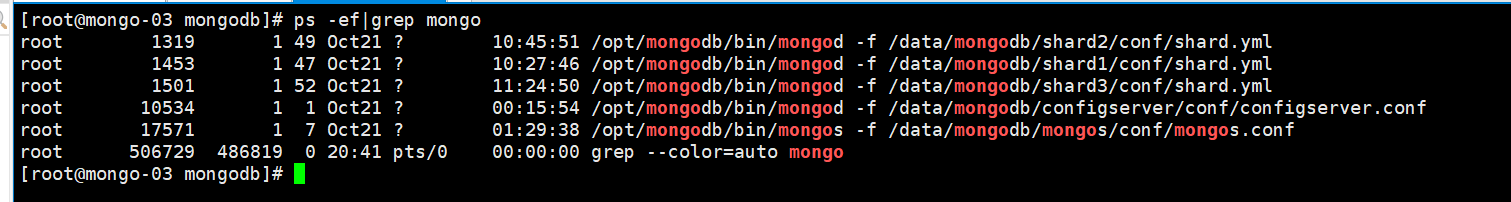

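Following the layout described in sections 4.1 and 4.2, start the corresponding shard instances on each cloud host:

```shell
# Run once for each shard assigned to this host, replacing shardN with shard1 ... shard5
/opt/mongodb/bin/mongod -f /data/mongodb/shardN/conf/shard.conf
```

Initializing the shard replica sets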

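Take shard1 as an example: its replica set is deployed on mongo1, mongo2 and mongo3, and the instances listen on port 27001, so initialization can be run on any one of those three hosts, as follows: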

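```shell
# Open the mongo shell on the local shard1 instance
/opt/mongodb/bin/mongo --port 27001
```

```javascript
use admin
// List the members of the shard1 replica set
config = {_id: "shard1", members: [
  {_id: 0, host: "10.14.2.2:27001"},
  {_id: 1, host: "10.14.2.11:27001"},
  {_id: 2, host: "10.14.2.26:27001"}
]}
// Initialize the shard1 replica set
rs.initiate(config)
// Leave the shell
exit
```

After initialization, rs.status() returns the following: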

```
{
	"set" : "shard1",
	"date" : ISODate("2023-10-22T14:38:15.527Z"),
	"myState" : 1,
	"term" : NumberLong(1),
	"syncSourceHost" : "",
	"syncSourceId" : -1,
	"heartbeatIntervalMillis" : NumberLong(2000),
	"majorityVoteCount" : 2,
	"writeMajorityCount" : 2,
	"votingMembersCount" : 3,
	"writableVotingMembersCount" : 3,
	"optimes" : {
		"lastCommittedOpTime" : {
			"ts" : Timestamp(1697985495, 8699),
			"t" : NumberLong(1)
		},
		"lastCommittedWallTime" : ISODate("2023-10-22T14:38:15.521Z"),
		"readConcernMajorityOpTime" : {
			"ts" : Timestamp(1697985495, 8699),
			"t" : NumberLong(1)
		},
		"readConcernMajorityWallTime" : ISODate("2023-10-22T14:38:15.521Z"),
		"appliedOpTime" : {
			"ts" : Timestamp(1697985495, 8701),
			"t" : NumberLong(1)
		},
		"durableOpTime" : {
			"ts" : Timestamp(1697985495, 7160),
			"t" : NumberLong(1)
		},
		"lastAppliedWallTime" : ISODate("2023-10-22T14:38:15.525Z"),
		"lastDurableWallTime" : ISODate("2023-10-22T14:38:15.446Z")
	},
	"lastStableRecoveryTimestamp" : Timestamp(1697985479, 755),
	"electionCandidateMetrics" : {
		"lastElectionReason" : "electionTimeout",
		"lastElectionDate" : ISODate("2023-10-21T15:40:04.226Z"),
		"electionTerm" : NumberLong(1),
		"lastCommittedOpTimeAtElection" : {
			"ts" : Timestamp(0, 0),
			"t" : NumberLong(-1)
		},
		"lastSeenOpTimeAtElection" : {
			"ts" : Timestamp(1697902793, 1),
			"t" : NumberLong(-1)
		},
		"numVotesNeeded" : 2,
		"priorityAtElection" : 1,
		"electionTimeoutMillis" : NumberLong(10000),
		"numCatchUpOps" : NumberLong(0),
		"newTermStartDate" : ISODate("2023-10-21T15:40:04.259Z"),
		"wMajorityWriteAvailabilityDate" : ISODate("2023-10-21T15:40:04.730Z")
	},
	"members" : [
		{
			"_id" : 0,
			"name" : "10.14.2.2:27001",
			"health" : 1,
			"state" : 1,
			"stateStr" : "PRIMARY",
			"uptime" : 85326,
			"optime" : {
				"ts" : Timestamp(1697985495, 8701),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:38:15Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:38:15.525Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:38:15.446Z"),
			"syncSourceHost" : "",
			"syncSourceId" : -1,
			"infoMessage" : "",
			"electionTime" : Timestamp(1697902804, 1),
			"electionDate" : ISODate("2023-10-21T15:40:04Z"),
			"configVersion" : 2,
			"configTerm" : 1,
			"self" : true,
			"lastHeartbeatMessage" : ""
		},
		{
			"_id" : 1,
			"name" : "10.14.2.11:27001",
			"health" : 1,
			"state" : 2,
			"stateStr" : "SECONDARY",
			"uptime" : 82701,
			"optime" : {
				"ts" : Timestamp(1697985493, 10044),
				"t" : NumberLong(1)
			},
			"optimeDurable" : {
				"ts" : Timestamp(1697985493, 10044),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:38:13Z"),
			"optimeDurableDate" : ISODate("2023-10-22T14:38:13Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:38:15.525Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:38:15.521Z"),
			"lastHeartbeat" : ISODate("2023-10-22T14:38:13.677Z"),
			"lastHeartbeatRecv" : ISODate("2023-10-22T14:38:14.926Z"),
			"pingMs" : NumberLong(0),
			"lastHeartbeatMessage" : "",
			"syncSourceHost" : "10.14.2.2:27001",
			"syncSourceId" : 0,
			"infoMessage" : "",
			"configVersion" : 2,
			"configTerm" : 1
		},
		{
			"_id" : 2,
			"name" : "10.14.2.26:27001",
			"health" : 1,
			"state" : 2,
			"stateStr" : "SECONDARY",
			"uptime" : 82701,
			"optime" : {
				"ts" : Timestamp(1697985493, 8307),
				"t" : NumberLong(1)
			},
			"optimeDurable" : {
				"ts" : Timestamp(1697985493, 8290),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:38:13Z"),
			"optimeDurableDate" : ISODate("2023-10-22T14:38:13Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:38:15.521Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:38:15.521Z"),
			"lastHeartbeat" : ISODate("2023-10-22T14:38:13.539Z"),
			"lastHeartbeatRecv" : ISODate("2023-10-22T14:38:14.560Z"),
			"pingMs" : NumberLong(0),
			"lastHeartbeatMessage" : "",
			"syncSourceHost" : "10.14.2.2:27001",
			"syncSourceId" : 0,
			"infoMessage" : "",
			"configVersion" : 2,
			"configTerm" : 1
		}
	],
	"ok" : 1,
	"$gleStats" : {
		"lastOpTime" : Timestamp(0, 0),
		"electionId" : ObjectId("7fffffff0000000000000001")
	},
	"lastCommittedOpTime" : Timestamp(1697985495, 8699),
	"$configServerState" : {
		"opTime" : {
			"ts" : Timestamp(1697985494, 11154),
			"t" : NumberLong(1)
		}
	},
	"$clusterTime" : {
		"clusterTime" : Timestamp(1697985495, 8701),
		"signature" : {
			"hash" : BinData(0,"XFmpixBFlUpxxzT08huIKV7BGkM="),
			"keyId" : NumberLong("7292435404353962007")
		}
	},
	"operationTime" : Timestamp(1697985495, 8701)
}
```

The members field of this output lists shard1's members; the member at 10.14.2.2:27001 is the primary (its state is 1).
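Reading the primary out of the full rs.status() output by eye is error-prone, especially when checking fifteen instances. The sketch below uses a hypothetical helper, `summarizeStatus`, that returns the set name, the primary, the secondaries and any member whose `health` is not 1. The trimmed status object is copied from the shard1 output above. The function uses only plain ES2015, so it should also work when pasted into the mongo shell and called as `summarizeStatus(rs.status())`, with `print` in place of `console.log`.

```javascript
// Hypothetical helper: summarize an rs.status() document.
// state 1 = PRIMARY, state 2 = SECONDARY; health 1 = member is up.
function summarizeStatus(status) {
  const names = (pred) => status.members.filter(pred).map((m) => m.name);
  const primaries = names((m) => m.state === 1);
  return {
    set: status.set,
    primary: primaries.length === 1 ? primaries[0] : null, // null if no single primary is visible
    secondaries: names((m) => m.state === 2),
    unhealthy: names((m) => m.health !== 1),
  };
}

// Trimmed copy of the shard1 rs.status() output above.
const shard1Status = {
  set: "shard1",
  members: [
    { name: "10.14.2.2:27001", health: 1, state: 1, stateStr: "PRIMARY" },
    { name: "10.14.2.11:27001", health: 1, state: 2, stateStr: "SECONDARY" },
    { name: "10.14.2.26:27001", health: 1, state: 2, stateStr: "SECONDARY" },
  ],
};

console.log(summarizeStatus(shard1Status));
```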

5.3 mongos

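```shell
# Configure the cluster through mongos
/opt/mongodb/bin/mongo --port 30000 << EOF
use admin
// Only adding shard4 is shown here; the other shards are added the same way
sh.addShard("shard4/10.14.2.11:27004,10.14.2.2:27004,10.14.2.24:27004")
EOF
```

6. Adjusting Replica-Set Primaries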

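The rs.initiate() calls above do not set member priorities, so the election alone decides where each shard's primary ends up, and the primary may have to be moved by hand afterwards. In this plan each host is meant to carry one primary, so that the write load is spread evenly across the five hosts.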

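For example, shard4 is deployed on mongo1, mongo2 and mongo5, and its primary was expected to be on mongo5. The members' vote, however, elected mongo1 (10.14.2.2). According to the plan in section 4, mongo1 is meant to host the primary of shard1, so shard4's primary needs to be moved.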
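The adjustment below lowers every member's priority to 0.5 and then raises the intended primary back to 1. The hypothetical helper `preferPrimary` sketches the same change on a copy of the rs.conf() document. It matches the target member by its `host` string rather than by array index, which avoids depending on each member's position in the array; in this article's shard4 output, rs.status() and rs.conf() even show different `_id` values for the same hosts. The priorities 1 and 0.5 are simply the values used here. In the mongo shell it would be applied as `rs.reconfig(preferPrimary(rs.conf(), "10.14.2.24:27004"))`.

```javascript
// Hypothetical helper: return a copy of a replica-set config document in
// which desiredHost has priority `high` and every other member has `low`.
// Members are matched by host string, not by their position in the array.
function preferPrimary(conf, desiredHost, high = 1, low = 0.5) {
  if (!conf.members.some((m) => m.host === desiredHost)) {
    throw new Error(`${desiredHost} is not a member of ${conf._id}`);
  }
  return Object.assign({}, conf, {
    members: conf.members.map((m) =>
      Object.assign({}, m, { priority: m.host === desiredHost ? high : low })
    ),
  });
}

// Trimmed copy of the shard4 rs.conf() output in this article.
const shard4Conf = {
  _id: "shard4",
  version: 2,
  members: [
    { _id: 0, host: "10.14.2.11:27004", priority: 1, votes: 1 },
    { _id: 1, host: "10.14.2.2:27004", priority: 1, votes: 1 },
    { _id: 2, host: "10.14.2.24:27004", priority: 1, votes: 1 },
  ],
};

const newConf = preferPrimary(shard4Conf, "10.14.2.24:27004");
for (const m of newConf.members) {
  console.log(`${m.host} priority=${m.priority}`);
}
```

The original document is left unchanged, so the new priorities can be inspected before they are passed to rs.reconfig().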

Before the adjustment (rs.status()):

```
{
	"set" : "shard4",
	"date" : ISODate("2023-10-22T14:44:34.708Z"),
	"myState" : 2,
	"term" : NumberLong(1),
	"syncSourceHost" : "10.14.2.2:27004",
	"syncSourceId" : 0,
	"heartbeatIntervalMillis" : NumberLong(2000),
	"majorityVoteCount" : 2,
	"writeMajorityCount" : 2,
	"votingMembersCount" : 3,
	"writableVotingMembersCount" : 3,
	"optimes" : {
		"lastCommittedOpTime" : {
			"ts" : Timestamp(1697985874, 1138),
			"t" : NumberLong(1)
		},
		"lastCommittedWallTime" : ISODate("2023-10-22T14:44:34.704Z"),
		"readConcernMajorityOpTime" : {
			"ts" : Timestamp(1697985874, 1138),
			"t" : NumberLong(1)
		},
		"readConcernMajorityWallTime" : ISODate("2023-10-22T14:44:34.704Z"),
		"appliedOpTime" : {
			"ts" : Timestamp(1697985874, 1138),
			"t" : NumberLong(1)
		},
		"durableOpTime" : {
			"ts" : Timestamp(1697985874, 1138),
			"t" : NumberLong(1)
		},
		"lastAppliedWallTime" : ISODate("2023-10-22T14:44:34.704Z"),
		"lastDurableWallTime" : ISODate("2023-10-22T14:44:34.704Z")
	},
	"lastStableRecoveryTimestamp" : Timestamp(1697985847, 562),
	"electionParticipantMetrics" : {
		"votedForCandidate" : true,
		"electionTerm" : NumberLong(1),
		"lastVoteDate" : ISODate("2023-10-21T15:50:17.829Z"),
		"electionCandidateMemberId" : 0,
		"voteReason" : "",
		"lastAppliedOpTimeAtElection" : {
			"ts" : Timestamp(1697903406, 1),
			"t" : NumberLong(-1)
		},
		"maxAppliedOpTimeInSet" : {
			"ts" : Timestamp(1697903406, 1),
			"t" : NumberLong(-1)
		},
		"priorityAtElection" : 1,
		"newTermStartDate" : ISODate("2023-10-21T15:50:17.856Z"),
		"newTermAppliedDate" : ISODate("2023-10-21T15:50:18.141Z")
	},
	"members" : [
		{
			"_id" : 0,
			"name" : "10.14.2.2:27004",
			"health" : 1,
			"state" : 1,
			"stateStr" : "PRIMARY",
			"uptime" : 82467,
			"optime" : {
				"ts" : Timestamp(1697985874, 1008),
				"t" : NumberLong(1)
			},
			"optimeDurable" : {
				"ts" : Timestamp(1697985874, 877),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:44:34Z"),
			"optimeDurableDate" : ISODate("2023-10-22T14:44:34Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:44:34.651Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:44:34.555Z"),
			"lastHeartbeat" : ISODate("2023-10-22T14:44:34.655Z"),
			"lastHeartbeatRecv" : ISODate("2023-10-22T14:44:34.623Z"),
			"pingMs" : NumberLong(0),
			"lastHeartbeatMessage" : "",
			"syncSourceHost" : "",
			"syncSourceId" : -1,
			"infoMessage" : "",
			"electionTime" : Timestamp(1697903417, 1),
			"electionDate" : ISODate("2023-10-21T15:50:17Z"),
			"configVersion" : 2,
			"configTerm" : 1
		},
		{
			"_id" : 1,
			"name" : "10.14.2.11:27004",
			"health" : 1,
			"state" : 2,
			"stateStr" : "SECONDARY",
			"uptime" : 84982,
			"optime" : {
				"ts" : Timestamp(1697985874, 1138),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:44:34Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:44:34.704Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:44:34.704Z"),
			"syncSourceHost" : "10.14.2.2:27004",
			"syncSourceId" : 0,
			"infoMessage" : "",
			"configVersion" : 2,
			"configTerm" : 1,
			"self" : true,
			"lastHeartbeatMessage" : ""
		},
		{
			"_id" : 2,
			"name" : "10.14.2.24:27004",
			"health" : 1,
			"state" : 2,
			"stateStr" : "SECONDARY",
			"uptime" : 82467,
			"optime" : {
				"ts" : Timestamp(1697985872, 1254),
				"t" : NumberLong(1)
			},
			"optimeDurable" : {
				"ts" : Timestamp(1697985872, 1254),
				"t" : NumberLong(1)
			},
			"optimeDate" : ISODate("2023-10-22T14:44:32Z"),
			"optimeDurableDate" : ISODate("2023-10-22T14:44:32Z"),
			"lastAppliedWallTime" : ISODate("2023-10-22T14:44:32.711Z"),
			"lastDurableWallTime" : ISODate("2023-10-22T14:44:32.711Z"),
			"lastHeartbeat" : ISODate("2023-10-22T14:44:32.740Z"),
			"lastHeartbeatRecv" : ISODate("2023-10-22T14:44:34.045Z"),
			"pingMs" : NumberLong(0),
			"lastHeartbeatMessage" : "",
			"syncSourceHost" : "10.14.2.2:27004",
			"syncSourceId" : 0,
			"infoMessage" : "",
			"configVersion" : 2,
			"configTerm" : 1
		}
	],
	"ok" : 1,
	"$gleStats" : {
		"lastOpTime" : Timestamp(0, 0),
		"electionId" : ObjectId("000000000000000000000000")
	},
	"lastCommittedOpTime" : Timestamp(1697985874, 1138),
	"$configServerState" : {
		"opTime" : {
			"ts" : Timestamp(1697985873, 932),
			"t" : NumberLong(1)
		}
	},
	"$clusterTime" : {
		"clusterTime" : Timestamp(1697985874, 1138),
		"signature" : {
			"hash" : BinData(0,"psv7EfqCNX8d+vaYsgnV8IaDJjA="),
			"keyId" : NumberLong("7292435404353962007")
		}
	},
	"operationTime" : Timestamp(1697985874, 1138)
}
```

Adjustment steps:

```
shard4:PRIMARY> cfg =rs.conf()
{
	"_id" : "shard4",
	"version" : 2,
	"term" : 1,
	"protocolVersion" : NumberLong(1),
	"writeConcernMajorityJournalDefault" : true,
	"members" : [
		{
			"_id" : 0,
			"host" : "10.14.2.11:27004",
			"arbiterOnly" : false,
			"buildIndexes" : true,
			"hidden" : false,
			"priority" : 1,
			"tags" : {

			},
			"slaveDelay" : NumberLong(0),
			"votes" : 1
		},
		{
			"_id" : 1,
			"host" : "10.14.2.2:27004",
			"arbiterOnly" : false,
			"buildIndexes" : true,
			"hidden" : false,
			"priority" : 1,
			"tags" : {

			},
			"slaveDelay" : NumberLong(0),
			"votes" : 1
		},
		{
			"_id" : 2,
			"host" : "10.14.2.24:27004",
			"arbiterOnly" : false,
			"buildIndexes" : true,
			"hidden" : false,
			"priority" : 1,
			"tags" : {

			},
			"slaveDelay" : NumberLong(0),
			"votes" : 1
		}
	],
	"settings" : {
		"chainingAllowed" : true,
		"heartbeatIntervalMillis" : 2000,
		"heartbeatTimeoutSecs" : 10,
		"electionTimeoutMillis" : 10000,
		"catchUpTimeoutMillis" : -1,
		"catchUpTakeoverDelayMillis" : 30000,
		"getLastErrorModes" : {

		},
		"getLastErrorDefaults" : {
			"w" : 1,
			"wtimeout" : 0
		},
		"replicaSetId" : ObjectId("6536213d7a6185af45ff5fce")
	}
}
shard4:PRIMARY> cfg.members[0].priority = 0.5
0.5
shard4:PRIMARY> cfg.members[1].priority = 0.5
0.5
shard4:PRIMARY> cfg.members[2].priority = 0.5
0.5
shard4:PRIMARY> cfg.members[2].priority = 1
1
shard4:PRIMARY> rs.reconfig(cfg)
{
	"ok" : 1,
	"$gleStats" : {
		"lastOpTime" : {
			"ts" : Timestamp(1698047879, 1),
			"t" : NumberLong(1)
		},
		"electionId" : ObjectId("7fffffff0000000000000001")
	},
	"lastCommittedOpTime" : Timestamp(1698047880, 32389),
	"$configServerState" : {
		"opTime" : {
			"ts" : Timestamp(1698047880, 17262),
			"t" : NumberLong(1)
		}
	},
	"$clusterTime" : {
		"clusterTime" : Timestamp(1698047881, 501),
		"signature" : {
			"hash" : BinData(0,"XBN4dXvgdmlyoci2jGe1UxWoUwE="),
			"keyId" : NumberLong("7292435404353962007")
		}
	},
	"operationTime" : Timestamp(1698047879, 1)
}
```

After these commands, mongo5 (10.14.2.24) becomes the primary of shard4: once it has caught up, the member with the highest priority calls an election and takes over as primary, which can be confirmed with rs.status().